4.1 Overview

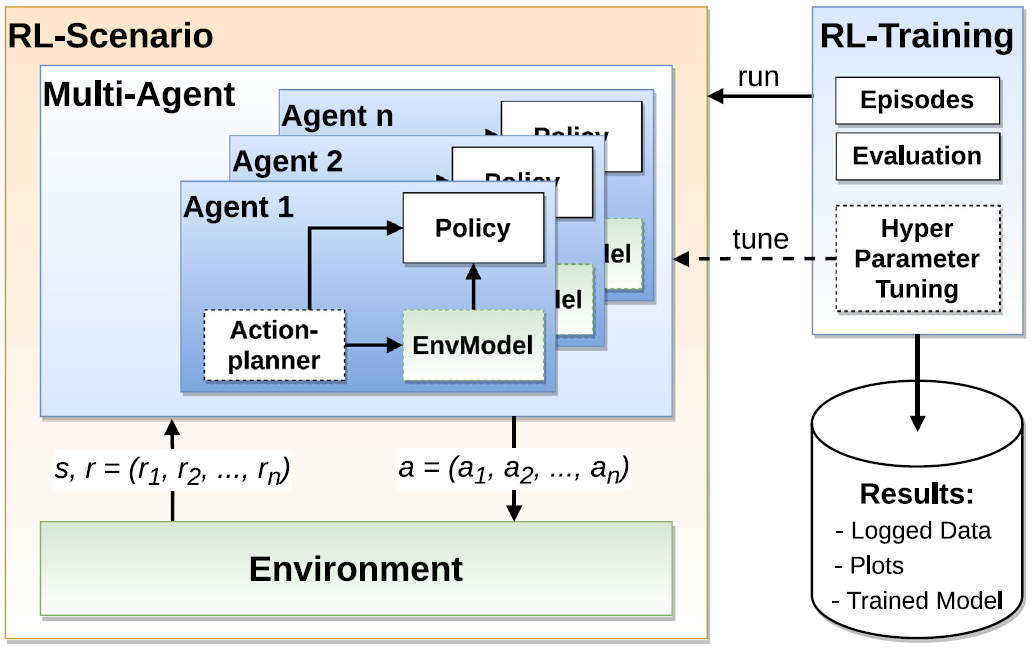

MLPro-RL is the first ready-to-use subpackage in MLPro. It provides complete base classes for the main reinforcement learning (RL) components, e.g. agent, environment, policy, multi-agent, and training. The training loop is based on the Markov decision process (MDP) model. MLPro-RL covers a broad scope of RL training, including model-free and model-based RL, single-agent and multi-agent RL, and simulation and real-hardware modes. Hence, this subpackage can serve as a one-stop solution for students, educators, RL engineers, and RL researchers in their RL-related tasks. The structure of MLPro-RL is shown in the accompanying figure.

This figure is taken from the MLPro 1.0 paper.

Additionally, a more comprehensive explanation of MLPro-RL, including a sample application on controlling a UR5 robot, can be found in the paper MLPro 1.0 - Standardized Reinforcement Learning and Game Theory in Python.
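To make the split into agent, environment, policy, and training loop more concrete, the following is a minimal, generic sketch of an MDP-style interaction loop in plain Python. It does not use the MLPro-RL API: the names `ToyEnvironment`, `RandomPolicy`, `ToyAgent`, and `run_episode` are hypothetical and chosen only for illustration, and the actual MLPro-RL base classes may be organized differently.

```python
import random


class ToyEnvironment:
    """Hypothetical 1-D corridor with states 0..size-1; the goal is the last state."""

    def __init__(self, size=5):
        self.size = size
        self.state = 0

    def reset(self):
        # Start every episode at the left end of the corridor.
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); the state is clipped to the corridor.
        self.state = min(max(self.state + action, 0), self.size - 1)
        done = self.state == self.size - 1
        reward = 1.0 if done else -0.1
        return self.state, reward, done


class RandomPolicy:
    """Hypothetical policy that ignores the state and picks a random direction."""

    def __init__(self, seed=0):
        self._rng = random.Random(seed)

    def compute_action(self, state):
        return self._rng.choice([-1, 1])


class ToyAgent:
    """Hypothetical agent that delegates action selection to its policy."""

    def __init__(self, policy):
        self.policy = policy
        self.total_reward = 0.0

    def act(self, state):
        return self.policy.compute_action(state)

    def observe(self, reward):
        # A learning agent would adapt its policy here; this one only accumulates reward.
        self.total_reward += reward


def run_episode(env, agent, max_steps=100):
    """Run one episode: observe state, act, receive reward, repeat until done."""
    state = env.reset()
    for step in range(1, max_steps + 1):
        action = agent.act(state)
        state, reward, done = env.step(action)
        agent.observe(reward)
        if done:
            return step, True
    return max_steps, False


if __name__ == "__main__":
    agent = ToyAgent(RandomPolicy(seed=0))
    steps, reached_goal = run_episode(ToyEnvironment(), agent)
    print(steps, reached_goal, round(agent.total_reward, 2))
```

Because the agent only depends on a `compute_action` method, the policy can be swapped without touching the environment or the loop, which is the kind of separation the base classes above are meant to standardize.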

To use MLPro-RL, you can import the RL modules as follows:

```python
from mlpro.rl.models import *
```

You can also check out numerous ready-to-run examples in our how-to files or on the MLPro GitHub. Moreover, technical API documentation can be found in Appendix 2.